The Hype Cycle and Perfect Prediction Horizons

We tend to overestimate the effect of a technology in the short run and underestimate the effect in the long run.

So declared Roy Amara in 2006. Futurists have since been wrestling with this law and its implications.

Amara’s Law implies that between the early disappointment and the later unanticipated impact at scale there must be a moment when we get it about right.

I reckon this Perfect Prediction Horizon is 15 years in the future.

We expect too much of an innovation in the first ten years. We foresee too little impact from twenty years out and thereafter. But our intuitions tend to be about right at that 15-year time horizon.

The Hype Cycle captures this (and perhaps little else) well.

Dad-tactics before the birth

Being a new Dad is busy. It’s tiring. It means being always on and always available. Being interrupted is your new default state. Your longest available free time will be measured in minutes. It will be unscheduled.

Although more daunting, all the parenting things will take care of themselves.

Instead, I wish I had received advice on how best to make use of my time before the birth, and on how to prepare my daily life for the fragmented tempo of being a parent.

In short:

doing any time- or attention-intensive projects and planning upfront

setting up my habits to be modular and adaptable

mentally preparing for this new interrupted mode

squeezing in some last minute responsibility-free fun.

For me, this would mean:

Get any strategic thinking done now. Be that career, family, finances or side projects. You will not have brain space to do so for at least 6 months. Your preferences and outlook will shift after the birth. Do the building blocks of strategic planning now and adapt later. We did a lot of career and family decision-making on the fly that added undue stress.

Write down your plan. You probably have some plans for how you would like to parent. In the fog of war, these plans will be forgotten. Write it down. Make it short; make it simple. You will be in reaction mode for 6 months and will fall into the habits of whatever’s easiest or pushed on you by grandparents, aunts and nurses.

Get fit. It’s a base from which to build. I did the sympathy pregnancy thing and transformed into a fat fool. Plant the seeds of a fitness habit that has a minimal dose you can do while sleep deprived and at short notice during brief, unscheduled windows. Walking the buggy is good, but you need to sweat; I didn’t do this until much later.

Put your finances in order. Being explicit about your financial situation will be a source of security for you both; mainly for herself.

Read books. Especially hard ones. You’ll not be reading many of these again any time soon. They’ll sit there taunting you on the bookshelf, while the little one taunts you from the playmat.

Get away. Lads’ trips will be a very rare commodity. Get one in now even if you don’t feel like it. I wasn’t in the headspace for one and thought I had to stick around Adeline for support. Get debauched.

Savour being ‘carefree’. Enjoy doing things at your own pace. Enjoy doing things last minute. Enjoy being accountable to ‘almost’ no-one. Drive to Dundee in your bare feet.

Read parenting books in advance. You won’t be reading these on the battlefield. They’re mostly bollocks; they’re all long-winded and boring. Write short actionable notes.

Think strategically about career. Make any career moves now that will give you more flexibility when the little one arrives. Identify what work you can do on autopilot and what requires more mental bandwidth. Work post-baby is different. I don’t know how anyone does it.

Get any creative work done. Unless creative work is your bread and butter, it will be increasingly hard to carve out time and mental space for deep work. As I said - autopilot.

Figure out how to quickly decompress. You will need to do this. This could be meditation, pushups, short walks, whatever. Having a go-to tactic in your pocket will help. Trying to find what works when you already need it is not ideal.

Stretch. I put out my back, changing Luca’s nappy on day 3. Partially from moving house a fortnight before, partially from sitting in a hospital chair overnight, almost entirely from having the flexibility of marble pillars.

Finish any projects or permanently shelve them. If there are any large loose threads, either tie them off or leave them go. You don’t need that mental baggage. It’s looking increasingly unlikely that I’ll be lining out for Man United. I’ve put an end to practising my autograph. I feel a lot lighter.

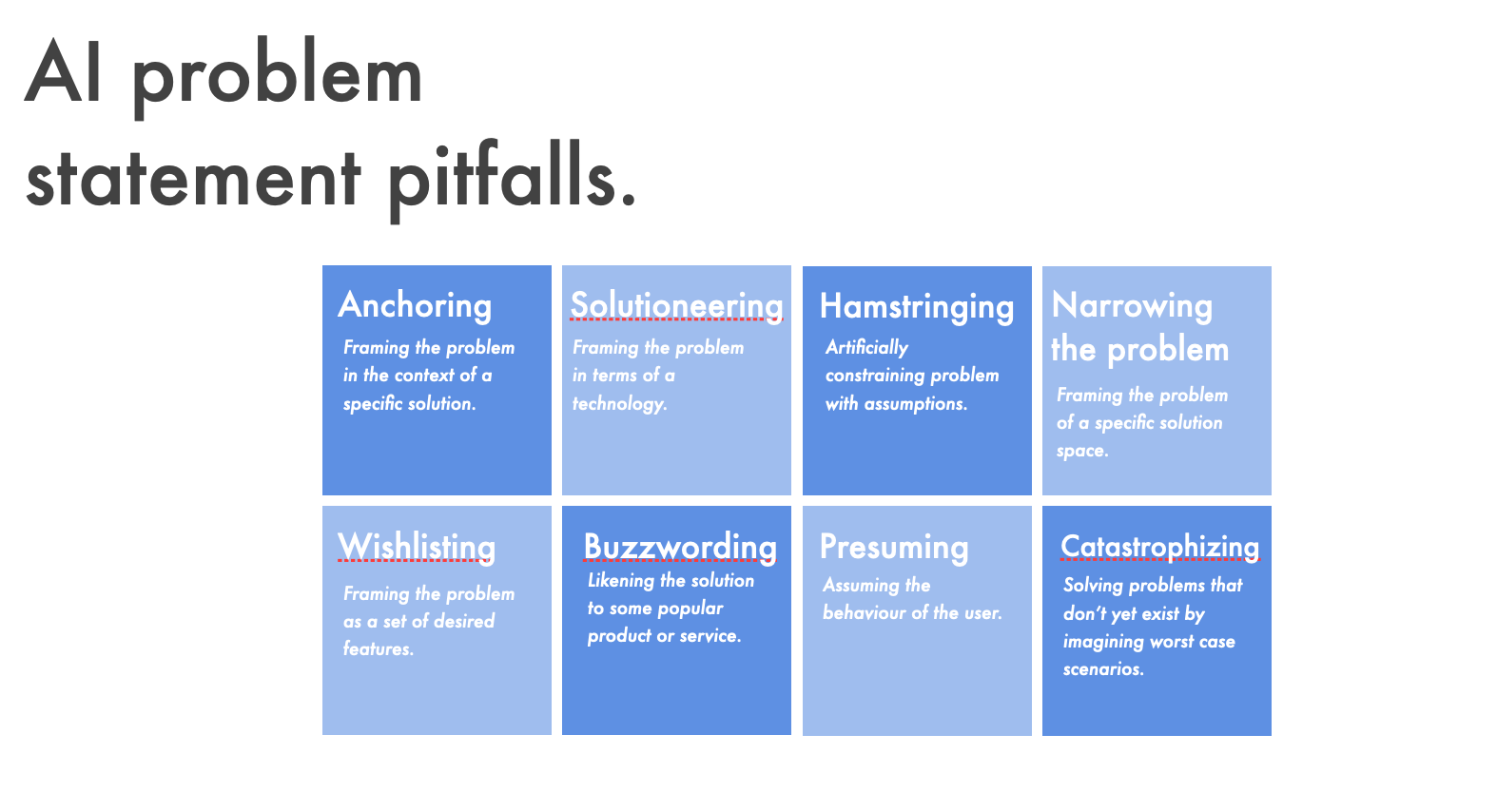

AI Problem Statements

A problem statement is a tool to help guide your team toward building the right product. Defining AI problem statements well is important in pointing a path to a worthwhile AI product.

It’s easy to solve the wrong problems. Good design relentlessly questions assumptions, reframing the design problem to be solved. We know this, and yet, HOW to actually reframe a problem is missing from our conversations. - Stephen Anderson

AI problem statements fall into one of two traps.

Too broad to be meaningful, e.g. talking about value to users, adding intelligence, or automating X.

Too prescriptive so that we venture into the 'how to' territory.

It is quite an art to tread the line between open-ended and focused without drifting toward the excessively broad or the overly prescriptive. It is an art worth practising.

But first, some nuance. Stating that a problem is an AI problem is prescriptive in and of itself.

Nevertheless, here are some rules of thumb that I’ve found useful in avoiding bad problem statements when designing products that are likely to include AI.

These pitfalls can confront any product. However, they are particularly prevalent in AI products. Interestingly, they appear especially jarring to seasoned product managers moving into AI for the first time. These pitfalls are taken from a more exhaustive list by Stephen Anderson.

I’ve updated that list with some AI-specific examples.

Avoiding these eight pitfalls should help you to navigate the path between broadness and prescription. They are worth remembering.

A final word of encouragement: many great products have shipped despite imperfect problem statements. If you find yourself agonising over very minor details in the wording of the problem statement, stop.

It is the product that will be evaluated, not the problem statement.

The problem statement is simply a tool to get you there.

— — —

Sources & inspiration

Stop doing what you're told by Stephen Anderson.

Practical Design Discovery by Dan Brown.

Bad Problem Statements by Stephen Anderson.

Bad AI Problem Statements by Simon O’Regan


UX Design Teams and AI

UX design teams and Machine Learning are getting acquainted. But the relationship is still in its early stages.

In Dove et al. 2017, the contributions of 27 UX design teams to novel machine learning projects are investigated. How did the UX team impact the development of an AI product and what was their role in the process?

The involvement of the design team was broadly categorised as:

1. Gave an interactive form to a machine learning idea that came from others (e.g. engineers or data scientists).

2. Generated a novel design concept utilising machine learning, which was presented and then selected for integration into a new product or service.

3. Collaborated with engineers, product managers or others, and jointly developed an idea for a new product or service that utilised machine learning.

Broadly speaking, this amounts to: (1) UXers coming in after the fact to smooth things over and make the product usable, (2) design teams understanding ML well enough to come up with novel and feasible ideas, and (3) design teams requiring partnership with data scientists.

To me, this represents a surprisingly good return for design teams. The ‘desirable’ approaches tally at 19, or 70% of the teams surveyed.

Even the typically lamented category 1, which could be pragmatically translated as ‘the design team was pulled in late to make the AI product usable’, remains a non-ideal but somewhat inevitable result of a world where UX is not completely embedded in development and business teams.

The paper goes into detail about the challenges that designers experience when working with AI, in particular the constraints of working with this new, poorly-understood design material.

That designers working in AI should have a deep familiarity with the subject is a no-brainer; albeit non-trivial to realise.

To me, what’s more interesting, and unfortunately not covered in the paper, is how the designers’ experiences play out in the joint sessions. What happens within those sessions, and why is this collaborative method not as rosy as it might first appear?

Knowledge distributions

It’s not controversial to say that collaborative product development is desirable. But it’s not as simple as getting everyone in the same room. Or even of getting the right people in the room.

Where the knowledge of AI sits has a profound effect on the quality of the session. Does everybody in the room have a good understanding of AI? Or is expertise cleanly split between those who ‘get’ the algorithms and those who ‘get’ the user? A room of individuals with a healthy overlap of shared knowledge is far more likely to generate plausible, attractive ideas than one where knowledge sits in discrete groups.

The more that UX of AI knowledge sits within individual brains, the better.

The best thinking is done between synapses rather than in the air between two minds. To generate the spark of collaborative genius requires the individuals to understand each other. With too much friction, too much translation between the participants, ideas cannot flow and collaboration turns to committee meeting.

Trust

Shared understanding has benefits beyond the quality of individual idea generation. Shared understanding builds trust.

Creativity demands that individuals feel safe. That perception of safety can quickly evaporate when participants feel judged, or isolated from others in the room. One way that this can fester is when participants have very different points of view. I’ve seen creative confidence dissipate before my eyes when seemingly ‘dumb’ suggestions have been put forward by those unfamiliar with AI or when a team of engineers betrays a disregard for the experience of the user.

Getting UX of AI knowledge into more individual brains boosts the shared understanding in the group and creates an environment where good ideas are more likely to flow.

— — —

And so the challenge is to transport that intimate knowledge of AI into the minds of designers. Not so that they can work alone, but rather so that design sessions can capitalise on the combinatorial power of ‘Yes! And’, and so that the friction coefficient of ideation sessions goes to zero.

— — —


Books of 2020

Here’s a selection of recommended books that I read and finished in 2020 and that map to the topics covered in The Deployment Age.

Recommended books

The Old Way (re-read). Elizabeth Marshall Thomas

A Culture of Growth. Joel Mokyr

The Awakening: A History of the Western Mind AD 500 - AD 1700. Charles Freeman

Making the Modern World: Materials and Dematerialisation. Vaclav Smil

The Sovereign Individual. James Davidson and William Rees-Mogg

Scalable Innovation. Shteyn and Shtein

Possible Minds. John Brockman

How to Speak Machine. John Maeda

Men, Machines and Modern Times. Elting E. Morison.

This Idea is Brilliant. John Brockman

Deep Learning. Goodfellow, Bengio & Courville.

The Beginning of Infinity (re-read). David Deutsch

The Evolution of Technology. George Basalla

How Innovation Works. Matt Ridley

The Old Way: A Story of the First People (re-read). Elizabeth Marshall Thomas

One of my favourite books. A rich ethnography of the Bushmen of the Kalahari and by extension hunter-gatherer societies of the past.

Elizabeth Marshall Thomas was nineteen when her father took his family to live among the Bushmen of the Kalahari. The book, written fifty years later, recounts her experiences with the Bushmen, one of the last hunter-gatherer societies on earth. This, more than any other book I’ve read, provides a rich link to the origins of human society.

A Culture of Growth: The Origins of the Modern Economy. Joel Mokyr

A keystone text of Progress Studies. Mokyr explores the Enlightenment culture that sparked the Industrial Revolution. Why exactly did this movement lead to a wave of innovations in Europe that went on to trigger the Industrial Revolution and the sustained spread of global economic progress?

The Awakening: A History of the Western Mind AD 500 - AD 1700. Charles Freeman

A thorough history of European thought, inquiry and discovery from the fall of Rome in the fifth century AD to the Scientific Revolution.

Making the Modern World: Materials and Dematerialisation. Vaclav Smil

Heavy enough going, like most of Smil’s books. Here, he considers the principal materials used throughout history, from wood and stone, through to metals, alloys, plastics and silicon, describing their extraction and production as well as their dominant applications. The energy costs and environmental impact of rising material consumption are examined in detail.

The Sovereign Individual: Mastering the Transition to the Information Age. James Davidson and William Rees-Mogg

Surprisingly relevant in 2020. Written in the 90s, this long prediction paints a picture of the shift from an industrial to an information-based society. The transition will be marked by increasingly liberated individuals and drastically altered patterns of power in government, finance and society.

Scalable Innovation. Shteyn and Shtein

A thorough, believable model for the innovation process. The book takes a Systems Thinking approach to problem discovery and evaluation.

Possible Minds: 25 Ways of Looking at AI. John Brockman

Twenty-five essays on AI from the usual suspects at Edge.org. Constraining the topic to AI makes this perhaps the best of the Edge books. Plenty to ponder here.

How to Speak Machine: Computational Thinking for the Rest of Us. John Maeda

A sharp, accessible musing on computation by designer and technologist John Maeda. He offers a set of simple laws that govern computation today and a way to think about its future.

Men, Machines and Modern Times. Elting E. Morison.

A series of historical accounts that demonstrate the nature of technological change and society's reaction to it. Begins with resistance to innovation in the U.S. Navy following an officer's discovery of a more accurate way to fire a gun at sea; continues with thoughts about bureaucracy, paperwork, and card files; touches on computers; tells the unexpected history of a new model steamship in the 1860s; and describes the development of the Bessemer steel process.

The lessons and relevance to the deployment of technology in the 2020s are surprisingly apt.

This Idea is Brilliant. John Brockman

Lost, overlooked, and under-appreciated scientific concepts from the minds at Edge.org. Most of the concepts were not new to me, but the accompanying essays and explanations were excellent. An idea generation goldmine.

Deep Learning. Goodfellow, Bengio & Courville

An excellent overview of Deep Learning from some of its key contributors. Simple accessible language. Surprisingly engaging coverage of linear algebra and probability.

The Beginning of Infinity: Explanations that Transform the World. (Re-read) David Deutsch

Another of my favourite books. Deutsch offers an optimistic view on human knowledge and its importance in the grand scheme of things. An ode to explanations.

The Evolution of Technology. George Basalla

An evolutionary theory of technological change. An argument for gradual change and against the heroic individual.

How Innovation Works. Matt Ridley

Matt Ridley’s ode to innovation as the main event of the modern age. An argument for incremental, bottom-up innovation.

— — —

How I Read

I learned to read in the usual way.

Find a book that looks interesting, start the book, read through sequentially to the end. Read as many as you can. Never read a book twice.

Clearly, this is straightforward. Clearly, this is bollocks.

Over time I relaxed some of these behaviours. I re-read some books: either favourites or ones where I felt a second pass would reveal knowledge that I was unable to see on first reading.

I’ve never really read the foreword or acknowledgements and rarely do much more than skim the intro. Footnotes are strictly optional. Depending on the topic or author they can be uniformly ignored, gobbled down greedily or more typically, sampled at will like a bit of tiny textual tapas.

I’ve always read multiple books in parallel.

With ten or twenty books on the go, reading through them sequentially meant that some books were in-flight for more than 7 or 8 years. That had to stop; it’s simply too many open loops, too much work-in-progress.

To close those loops, I tackled the tyranny of sequential reading.

Nowadays, I treat books like a blog archive. I browse the table of contents and go immediately to the chapters that pique my interest. If those chapters are sufficiently good, I might go back to the beginning and the paradigm of usual reading. If they’re reasonably good but require reading the previous material, I’ll go back as far as the nearest interesting jumping-in point.

This is quite clearly the behaviour of someone raised in the internet age. For someone who learned to read pre-internet, it’s also obviously guilt-inducing. Nevertheless, it serves me well.

This is why I created some Principles of Reading. The intention is that I re-read these regularly, to make sure I’m not falling back into old patterns of behaviour that no longer serve me well.

Principles of Reading

The Chapter is the unit of currency. Sequence is strictly optional.

Finishing a book does not mean reading to the end. It means extracting what you need from it.

Drop a book that is not making your life better.

Footnotes are optional. Skipping ahead is allowed.

Spend an internet search session with the Bibliography.

Don’t read the fluff at the start.

If in doubt, buy the book.

Don’t hesitate to buy the hardcopy and Kindle versions of the same book.

Sources, Inspiration & Notes

Most of these habits were lifted from others. Who, I don’t recall, but likely some combination of Tyler Cowen, Paul Graham, Kevin Kelly, Tim Ferriss, Tiago Forte, David Perell and Nassim Taleb.

None of this applies to fiction, bar the rule to mercilessly drop books that are tedious.

The Experiential Futures Ladder

Experiential futures is the design of situations and stuff from the future to catalyse insight and change.

Most futures practices and writing centre on a high level of abstraction. Mostly narrative, diagrams, descriptions. Experiential futures explore more concrete manifestations of futures. In doing so, they give a tangible experience to interact with and a real feel for what that future implies.

To be effective, an experiential future does not create a moment or an artefact from a random future in isolation. Instead, the experience is deliberately oriented within a hierarchy of future details.

The Experiential Futures Ladder is a tool invented by Stuart Candy and Jake Dunagan that aids designers in this orientation exercise.

To begin, a Setting is defined; this is the type of future under exploration.

From this generic image of the future a Scenario is selected. This is a specific narrative and sequence of events.

Within this scenario, a Situation is explored. This situation details the circumstances in which we encounter this future. It’s the particular events that we, as the audience, will experience in physical form at 1:1 scale in various media.

Each of these rungs of the ladder directs us toward the physical Stuff that we interact with.

These layers orient participants to the elements of the process that are fixed (e.g. broad theme, the location, and the timing). That foundation creates a shared understanding of the future, and a starting point from which to explore the experience.

By narrowing down the arena for creativity to the open questions of this future, attention can be directed to the experience at hand and away from the infinite, inconsistent possibilities of an untethered experience.

Sources

Designing an experiential scenario: The People Who Vanished by Stuart Candy and Jake Dunagan

The Limits of Rapid Prototyping in AI

Rapid prototyping tests the human consequences of design ideas. It does so, quickly.

In essence, it is a design workflow that consists of ideation, prototyping, and testing where the iterations take place in quick succession.

These prototypes are the central artefact of the process and provide a tangible context to assess the intended and unintended consequences of a proposed solution.

The benefits are best seen in practice:

How these prototypes are used indicates whether the solution is suitable and points in the right direction or whether we’ve missed the mark and need further iterations. Often, they lead to suggestions for better adaptations, or entirely new design directions.

The act of generating new solutions in near real-time materially changes what it means to design and shapes the types of solutions that are experimented with. Quantity has a quality all of its own.

Rapid prototyping has limits. And these limits are tested in designing AI prototypes.

Rapid prototyping breaks down under two conditions:

if prototype generation isn't fast

if the prototypes are not sufficiently representative of the end solution to test user behaviour.

Unfortunately, AI prototypes tend toward both error modes.

Speed

The speed of prototype generation and assessment allows for rapid exploration of the solution space.

Smaller investments in potential solutions allow more ideas to be tested. Importantly, reducing the upfront cost of testing ideas leads to less conservative, more outlandish ideas being tested. The Search Space is broadened.

At the same time, rapid prototypes inoculate against over-investment in unfruitful design directions. It is easy to throw something cheap away; sunk costs are smaller and so is the tendency to remain unhappily married to ‘suboptimal’ ideas.

Accuracy

Deciding what to test with the AI prototype is important. Rapid prototyping is particularly unsuited to evaluating an idea where the performance of the AI model is a central but uncertain component. In other words, it struggles when the product will be useful only if performance exceeds some threshold, and we cannot be certain in advance that it will.

Additionally, if judicious error-handling is fundamental to the product, then a prototype that doesn't address a representative range of errors will be invalid. This is particularly the case if certain long-tail errors are catastrophic, resulting in a corrupted user experience and destroyed trust.

Users may love the happy path of a prototype’s omniscient AI, but if that is not what will ultimately be delivered, the prototype is little more than a toy.

— — —

What to do about it.

Deciding what to test in AI prototypes is central to a well-defined AI process. Splitting between a technical proof-of-concept that assesses performance and a user-focused prototype is a straightforward way to remove uncertainties around accuracy. Of course, this comes with a commitment to invest in the technical PoC prior to understanding user consequences.

To constrain the cost and time of the technical PoC, limiting it to a sub-section of the data may be useful. This PoC can then form the backend to a series of front-end rapid prototypes. Examples where this can work well include building for individual verticals of e-commerce sites, or a subset of use-cases in voice interactions.

Wizard of Oz prototypes are a favoured method of mocking up AI systems. To assess the impact of unexpected AI behaviours or algorithmic mistakes, purposefully building errors into the prototype is a good starting point. Listing out possible mistakes that the algorithm could make allows us to examine the types of reactions these errors can evoke in users. Of course, this will never be exhaustive and does not protect from unknown unknowns, but it is a substantial improvement on the over-optimistic AI magic that is typically tested.
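One way to picture error injection in a Wizard of Oz prototype is a small script that decides, turn by turn, whether the ‘wizard’ should give the scripted answer or a listed mistake. This is only a minimal sketch under my own assumptions: the error modes, their rates and the canned responses are invented for illustration and would need to come from your own list of plausible algorithmic mistakes.

```python
import random
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass(frozen=True)
class ErrorMode:
    name: str      # label recorded alongside the user's reaction
    rate: float    # probability of injecting this error on any one turn
    response: str  # what the 'AI' says when this error is injected


# Hypothetical error list for a voice assistant prototype.
ERROR_MODES = [
    ErrorMode("misheard_request", 0.10, "Playing 'Wake Me Up' by Avicii."),
    ErrorMode("no_answer", 0.05, "Sorry, I didn't catch that."),
    ErrorMode("confidently_wrong", 0.02, "Your alarm has been cancelled."),
]


def wizard_reply(
    scripted_reply: str,
    rng: random.Random,
    modes: Sequence[ErrorMode] = ERROR_MODES,
) -> Tuple[str, str]:
    """Return (reply, label), where label is 'correct' or the error name."""
    roll = rng.random()
    cumulative = 0.0
    for mode in modes:
        cumulative += mode.rate
        if roll < cumulative:
            return mode.response, mode.name
    return scripted_reply, "correct"


def run_session(scripted_replies: Sequence[str], seed: int = 0) -> List[Tuple[str, str]]:
    """Play through a test script; a fixed seed makes the session repeatable."""
    rng = random.Random(seed)
    return [wizard_reply(reply, rng) for reply in scripted_replies]


for reply, label in run_session(["Alarm set for 7am."] * 5, seed=42):
    print(label, "->", reply)
```

Logging the label next to each observed reaction makes it easier to see afterwards which kinds of mistake users shrug off and which destroy trust.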

— — —

Embedding UX processes into the design of AI systems remains one of the central challenges of building human-centred AI products. There is an abundance of opportunity to build new tools to bridge the divide between designers, data scientists and the products we put in the hands of our users.

Sources & inspiration

Re-examining Whether, Why, and How Human-AI Interaction Is Uniquely Difficult to Design by Yang et al.

— — —

The Design Difficulties of Human-AI Interaction

Designing for Human-AI interaction is hard.

Here Yang et al. catalog where designers run into problems when applying the traditional 4Ds process to designing AI systems. These difficulties can be broadly attributed to two sources:

Uncertainty surrounding AI capabilities

Output complexity of AI systems.

This uncertainty and complexity combination then manifests throughout the design process.

4D difficulties in designing with AI

Of course, these pain-points are not insurmountable. Rather they highlight key areas where attention should be focused to ensure that bad design outcomes do not creep in.

For example, despite the challenges, AI prototypes really can help.

If you’re looking for fertile ground to explore in AI innovation, look no further than these design problems. Not all AI advances are algorithmic.


The Bullshit-Industrial Complex

Bullshit has never been in short supply.

It is a naturally occurring byproduct of human societies. But the forces that have given rise to exponential growth in digital communication are fuelling a human bullshit mega-machine.

We are living in the midst of a bullshit epidemic.

This is clear to see in the creative world and it’s been bothering Sean Blanda. Back in 2017, his inbox at 99U was swamped by a deluge of bullshit artists.

Instead they choose to quote other people who quote other people and the insights can often be traced back in a recursive loop.

Their interest is not in making the reader’s life any better, it is in building their own profile as some kind of influencer or thought leader.

He describes the ecosystem. The Bullshit-Industrial Complex is a pyramid of groups that goes something like this:

Group 1: People actually shipping ideas, launching businesses, doing creative work, taking risks and sharing first-hand learnings.

Group 2: People writing about group 1 in clear, concise, accessible language.

—— Bullshit Maginot Line ——

Group 3: People aggregating the learnings of group 2, passing it off as first-hand wisdom.

Group 4: People aggregating the learnings of group 3, believing they are as worthy of praise as the people in group 1.

Groups 5+: And downward….

The astute reader will recognise that this piece is written from below the Bullshit Maginot Line.

This creates a polluted information ecosystem. And it’s worsening.

The rise of the infinite meta-aggregators is self-perpetuating. When content is already infinite, curation trumps creation. More so, when content quality is poor.

The rational response to a torrent of bad content is a counter-wave of aggregated, second-hand, filtered content.

Much of this will itself be bad content.

A side-effect of the Text Renaissance is the parallel rise in Bullshit production. The means of production have been democratised, and Bullshit is an easy on-ramp.

Incentives

This is a problem worth solving and the Platforms have tried to adapt.

Notably Medium, with its well-intentioned attempt to give writers a viable source of engagement-driven, non-ad-based income. Nevertheless, many canonical examples of Bullshit content remain front and centre on Medium.

Substack, where this is published, puts the decision-making back into the hands of the reader. You are the arbiter of Bullshit. One click is all it takes to unsubscribe and banish the bullshitter into email oblivion.

And yet the incentives still encourage the Bullshitter. The Algorithms still have some way to go.

The Self-Aware Bullshit Artist

That’s the ecosystem, let’s look at the individual.

Is it possible to recognise whether your writing is polluting the information ecosystem?

My own experience of bullshit artists is that they are painfully unaware of their plight ... or more to the point, of our plight.

Here’s a flow diagram I mocked up to keep myself honest.

You’ll notice that there are large gaps for subjectivity to slip through unnoticed. And so that leaves us with a distinctly unsatisfactory conclusion.

Bullshit is in the eye of the beholder.

Mastery, Norms and Self-Policing

So what’s a boy to do?

Feedback is central to developing a writing and thinking muscle-pair. Feedback sparks conversations, self-reflection, and correction. It also keeps you accountable.

Just ship it.

Or so the mantra goes. The default online writing advice is to publish early and publish often. And so the bullshit deluge continues, and the information ecosystem declines.

Paul Graham points to another path:

If you write a bad sentence, you don't publish it. You delete it and try again. Often you abandon whole branches of four or five paragraphs. Sometimes a whole essay... With essay writing, publication bias is the way to go.

He characterises writing worth publishing thus:

Useful writing tells people something true and important that they didn't already know, and tells them as unequivocally as possible.

Again, there’s plenty of room for subjective interpretation here.

Useful writing, too, is in the eye of the beholder.

— — —

So should I publish this?

It depends.

I’ve traversed the bullshit checklist but I’ve not agonised through one hundred iterations on the path to essay-worthy.

Instead, I’ll take a side-road. This is posted as a short-thought, a vehicle for consistent online writing. It’s low risk, minimally intrusive and yet still ‘out there’. Once I’m comfortable with that, and if it receives the thumbs up from one or two close collaborators, you’ll see it in The Deployment Age.

As for the ecosystem problem.

Hopefully, the spread of norms like this will dampen the inexorable production and propagation of bullshit. But I’m not optimistic. The incentives are misaligned and enforcing nuance at Platform-scale is incredibly difficult.

We’ve got Platform-scale problems that demand Platform-scale solutions.

And the information ecosystem has begun absorbing three new hyper-polluters: Fake News, AI Generated Content and Deepfakes.

File these under algorithmically under-determined.

— — —

Sources & Inspiration

How to write usefully by Paul Graham

The Creative World’s Bullshit Industrial Complex by Sean Blanda

An alternative to the bullshit industrial complex by Tom Critchlow

The Practice by Seth Godin

On Bullshit by Harry Frankfurt

A Text Renaissance by Venkatesh Rao

Exploring the Intuition Spaces of Others

John Salvatier has an interesting article on what he calls the ‘I Already Get It’ Slide, a cognitive bad habit that prompts you to gloss over the reasoning behind conclusions with which you are already familiar.

He describes the consequences:

This is unfortunate because the error prevents you from actually absorbing other’s intuitions, and absorbing other’s intuitions is important for doing anything hard.

In the article, John talks about a situation where his intuition was in fact incorrect, but there’s a more general learning here.

In innovation and research, it is not just the conclusion that is important, but the steps that led to the conclusion. And before that, there’s the spark that gave rise to the steps themselves. There are often multiple paths to the same destination.

Translating this to idea evaluation has profound implications for how we explore a concept or venture.

There is the visible argument - what’s presented - and behind that is a hidden intuition space. The entire Intuition Space will never be fully legible. Intuition is difficult to communicate. What’s interesting is that some of this Intuition Space is actually visible to us if we avoid jumping to ‘I Already Get It’ mode.

There is plenty to be learned from ideas with which we already agree.

The tendency is not only to focus on conclusions with which we already agree, and the reasoning behind them, but also to assume that we know the intuitions that led to the reasoning in the first place.

Collecting intuitions for ideas looks like fertile ground for idea exploration.

A promising new hobby.

— — —

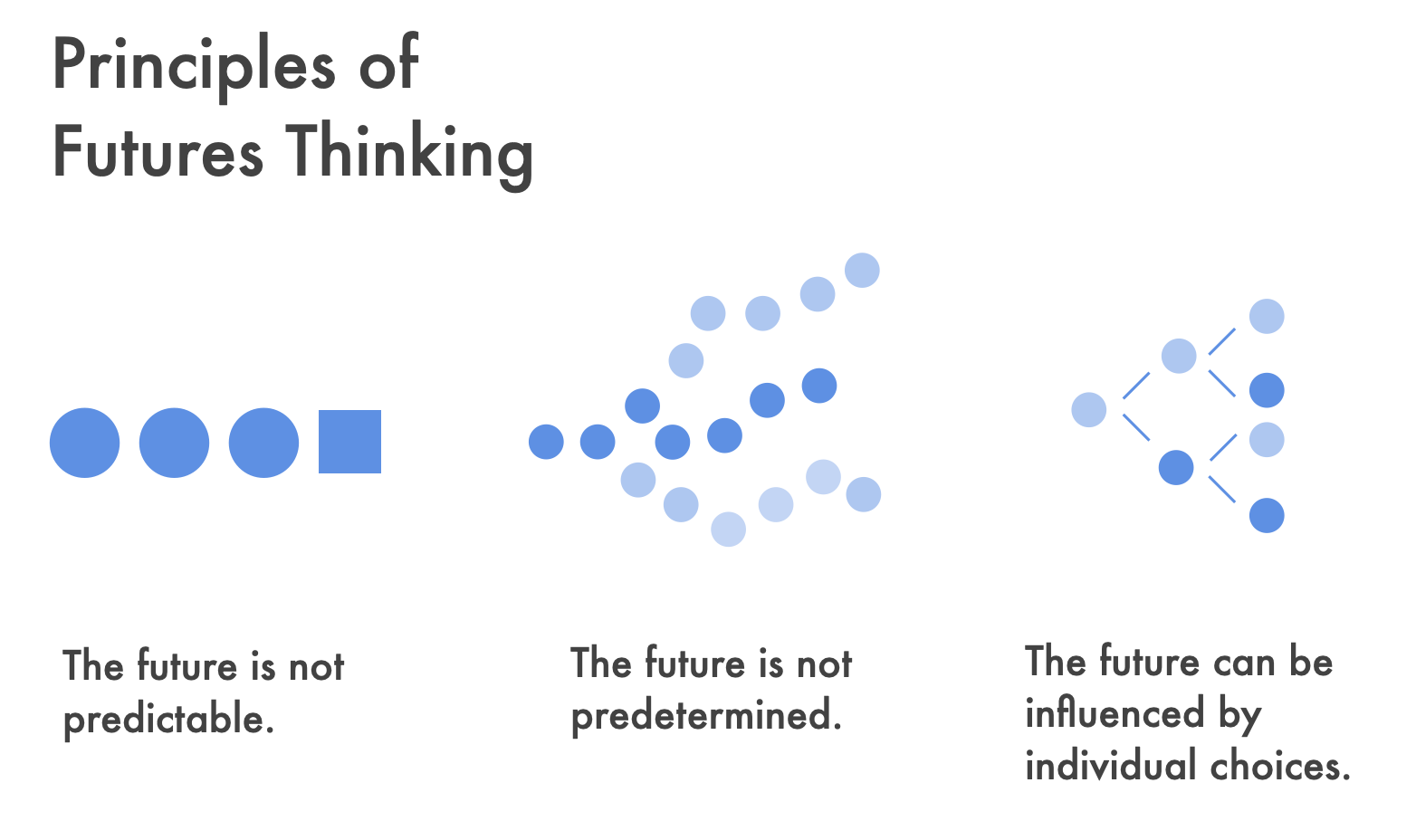

Principles of Futures Thinking

I've been thinking quite a bit recently on some core principles of Futures work that Roy Amara laid out in 1981.

These principles crisply point out why Futures work is possible. They outline the space in which Futures Thinking can take place. Within that space, Futures Thinking can be systematic and rigorous. It can materially impact what future we will live in.

Most skeptics get hung up on Principle 1. The future is not predictable.

If the future is not predictable what’s the point in speaking about it? Is it not better to build and see what works? I was firmly in this camp for 10 years. Uncertainty is uncomfortable. Guaranteed uncertainty is almost unbearable.

Thankfully, Principles 2 and 3 act as soothing balm to the harsh burn of skepticism.

The future is not predetermined. It is not inevitable. While it is impossible to predict, there is room to change what future we arrive at. Exploring what could happen is a useful first step on that journey.

The real relief comes from Principle 3. The future can be influenced by individual choices.

Small actions can make a difference. The future is influenceable; and it is influenceable by seemingly small contributions.

Individual choices matter.

— — —

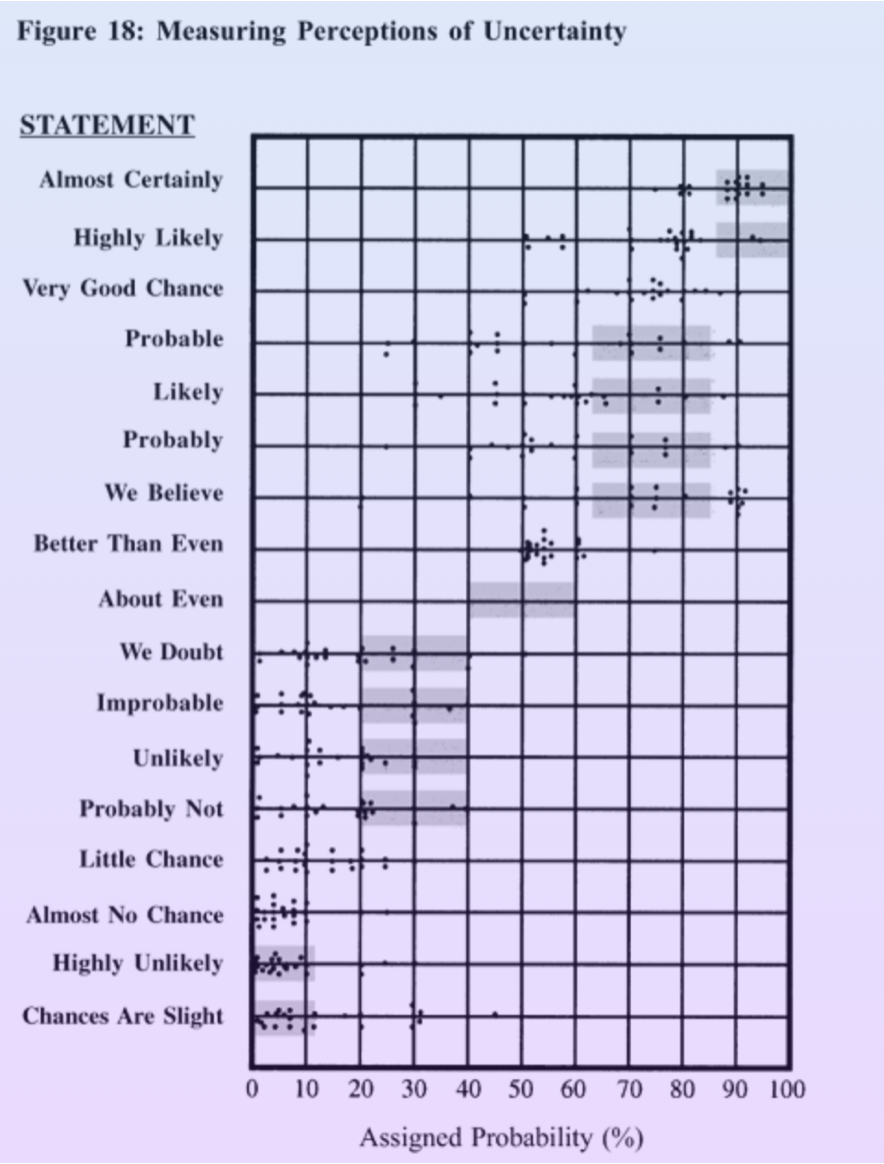

Levels of Uncertainty

We are all forecasters.

We have expectations about how the future will unfold; these expectations are forecasts.

The future is unknown and uncertain. And yet, each possible future is not equally probable. Our forecasts accept this fact.

It is non-trivial to assign probabilities to events arising from a complex world. More so, with very limited information. Our brains are not designed to assign specific probabilities to events.

Speaking about uncertainty is difficult.

Nevertheless, our language contains a host of terms to describe uncertainty. To give some indication of our conviction. Probably, Likely, Almost Certainly, Believe, Unlikely, Little Chance, amongst others.

We all know what these terms roughly mean.

But if action in the real world is downstream of these vague assessments, it is worth knowing what exactly these labels mean and what we can therefore infer about the future.

The CIA certainly thought so. The image above is taken from a survey of 23 NATO officers carried out by Sherman Kent in the 1960s and published in the 1977 Handbook for Decision Analysis (Barclay et al.).

We roughly agree about what these terms mean, but we are not precise.

As communicators, humans are imperfect. Our thoughts get lost in translation.

When we encode information in language, the words we speak (or write) are an approximation of the intended meaning. When those same words are heard (or read) by the audience, the decoding process introduces further drift from the initial intent.

Lossy communication is the rule of language.

Has this understanding of our language of uncertainty changed in the 50 years since? Reddit user zonination polled 46 other Reddit users and came to strikingly similar conclusions - beautifully visualised.

When we listen to the forecasts of others, it is not only the future that is uncertain. There is also uncertainty in our interpretation of their uncertainty.
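The spread in how different people read the same term can be sketched in a few lines of Python. The responses below are invented purely for illustration; they are not taken from the NATO survey or the Reddit poll, and the names `responses` and `spread` are hypothetical.

```python
from statistics import median

# Hypothetical data: the probability (in %) that five imagined readers
# assign to each phrase. Invented for illustration only; NOT the survey data.
responses = {
    "almost certainly": [90, 95, 97, 85, 99],
    "probably": [60, 75, 70, 80, 55],
    "unlikely": [20, 10, 30, 15, 25],
}

def spread(values):
    """Return the (lowest, median, highest) interpretation of a phrase."""
    return min(values), median(values), max(values)

# Summarise how widely each phrase is interpreted.
summary = {phrase: spread(values) for phrase, values in responses.items()}

for phrase, (low, mid, high) in summary.items():
    print(f"{phrase:>16}: {low}% to {high}% (median {mid}%)")
```

Even in this toy data, a speaker who says "probably" could be heard as meaning anything from 55% to 80%. The width of that range is the second layer of uncertainty.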

— — —

Sources & Inspiration

Handbook for Decision Analysis by Barclay et al.

Zonination on Reddit

Superforecasting by Philip Tetlock and Dan Gardner

AI prototypes make Data Products tangible

An overlooked aspect of Data Products in general and AI products in particular is that they are often 'invisible'. They lack tangibility - something to touch, something to see. This is an unrecognised barrier to good Product decision-making. Reasoning about the invisible is difficult. It's imprecise.

Prototypes make the intangible real.

They help with product decision-making, with communication, with building stakeholder buy-in, and with customer sales.

Invisibility

AI products often take the form of automating a process, removing something concrete and abstracting it into the automated ether. For customer service, this might mean removing a human from the customer care user journey. For email marketing it might mean replacing the need to manually set up an email campaign with a triggered system. In payments, it might mean removing explicit payment decisions and allowing your fridge to re-order milk when it runs out.

In other cases, the AI product might simply be an optimisation whose benefits are only observed in the aggregate. For example, customer segmentation that drives more effective advertising. Or more relevant product recommendations that drive clicks and ultimately sales.

Injecting artificial tangibility.

With these examples in mind, it is clear that invisibility adds a layer of complexity to the already tricky act of product decision-making.

More so, when no single individual has the complete picture of the product and its integrations. Complex product development environments, with many touch-points, moving parts and integrations are breeding grounds for confusion; something that is exacerbated when a concrete understanding of the product at hand is lacking.

A prototype gives a focal point around which discussions can centre.

This is true, even for exclusively internal stakeholders. On three separate occasions in the past two years, investing time in building a throw-away prototype has been the verifiable differentiator in accelerating progress and securing funding for AI initiatives for my team.

As humans we are well equipped to run simulations and scenarios in our heads, but this is an exercise that benefits greatly from pinning down some of the uncertainty. Injecting tangibility into the conversation augments our reasoning. It harnesses our ability to think by making.

Importantly, AI prototypes help stakeholders who are less familiar with AI to better understand what exactly the product does. They force feedback on potential obstacles and accelerate buy-in (or rejection) from customers and gate-keepers.

AI is still new territory for most. Grounding conversations in the tangible is an unreasonably effective tool for avoiding the twin perils of 'AI can do it all' and 'AI is too complicated'.

Machine Learning and the evolution of extended human capabilities

Machine Learning is an extension of the toolset that humans have invented to create change at scale in the world around them.

A coarse view of the Evolution of Technology shows the development of humans using more energy and more information more effectively to expand the capabilities of individuals and groups. These are the twin effects of Leverage and Automation.

There have been four big stops on this journey so far:

The rise of machines that could work on our behalf.

The rise of mass production, that greatly accelerated the precision and scale of machines that could work on our behalf.

The rise of software that could make decisions on our behalf.

The rise of AI, that greatly accelerates the precision and scale of decision-making that can be taken on our behalf.

This evolution of extending human capabilities can be further divided between technologies whose key innovation is to build in the physical world (move atoms), and those that make decisions (move bits). Note, moving bits comes with the bonus that it can be coupled with machines to move atoms.

The rise of atom-moving technologies was an evolution from manual tools to harnessing Steam and then Electricity to do more work.

The rise of bit-moving technologies was the harnessing of digital information, etched into silicon to do computation on our behalf. The first wave of implementations needed to be explicitly programmed (software). The latest wave allows the machine to find patterns itself (machine learning).

Machine Learning (even pre-AGI) promises massive leaps forward in the outsourcing of human thought and the use of leverage in human action.

Software is eating the world, and AI is eating Software.

Fallibilism and Product Management

Fallibilism is the idea that we can never be 100% certain we’re right and must therefore be always open to the possibility that we’re wrong. This relates to our understanding of the world - our collective basic, scientific testable beliefs. In Product, there are interesting parallels.

In Product, it's obvious that we don't have perfect knowledge and that certainty is beyond foolish. Clearly, our beliefs about a market opportunity, about the details of a product and what form and function will work are all highly uncertain.

Yet, even within our most open-minded Product plans, there are 'certainties' baked in. Core assumptions, that if false, risk collapsing any possibility of success.

As Product managers, we are all fallibilists.

A Fallibilist filter on Product, then, has lessons:

all Product beliefs are imperfect. They may be wrong, or superseded. This is what spurs the generation of new alternative hypotheses and the search for the next Product iteration.

even near perfect products are only near-perfect at one point in time. The world is a complex, dynamic system. Everything changes.

all Products are tentative, provisional, and capable of improvement. They can be, and have been, improved upon.

in the quest for product improvement, admitting one’s mistakes is the first step to learning from them and overcoming them.

Thankfully, much of this is now part of the Product canon.

Failed tests and new opportunities

Perhaps the most interesting lesson from Fallibilism is the notion that although a Product hypothesis may not be correct, this doesn’t imply that it must be entirely wrong.

A 'failed' Product test should not condemn a Product to an unquestioning, immediate grave. There may be kernels of useful truth within. I've too often seen reasonable, justifiable Product decisions thrown out in the zealotry of Lean or Design Thinking-infused reviews. The data says no - therefore, kill it now. This is a crying shame.

Failed Product tests contain information about what works.

Personally, I've found this lesson most useful in looking at the Product failures of others. It's easy to see the merit of your own failed efforts; harder to see in others.

Examining failed (or discarded) Product initiatives in a generous light is fertile ground for strong Product ideas. It has the added benefit of coming with a ready-made patron or team who will jump at the chance to have their Product (or part of it) resurrected.

Rather than treating a failed Product test as an example of what not to do, ask instead: if we run another experiment, what parts of this do we want to repeat?

Adaptive preference and shrinking Product Vision

An adaptive preference results when we modify aspirations toward expectations in light of experience.

We come to want what we think is within our grasp.

This is true for individuals and it is true for individual decision-makers within an organisation. It is true for groups and group decision-making.

As humans, we tend to downgrade the value of previously desired outcomes as their realisation becomes less likely and upgrade the value of previously undesired outcomes as their realisation becomes more likely.

The mind plays tricks.

The implications for Product Management are multi-fold:

Product vision downgrades when it meets obstacles.

Obstacles can arrive as market and customer reality - how desirable is this to customers.

Obstacles can arrive as organisational or political reality - how hard it is to convince others to test the vision against market obstacles.

Internal negativity, stagnation and politicking can not only kill good, viable product visions but worse, make the organisation believe it never even desired those audacious visions in the first place.

Market Reality

Adaptive preference in the light of early negative customer feedback shapes the product vision toward something that is more immediately desirable to the market. Typically, this shrinks the audacity of the product vision.

This is the tension between a valid reality distortion field and the cold, hard truth of customer feedback.

Some of the world’s greatest products faced an uphill struggle against the negativity of initial customer feedback - the car, the computer, the mobile. So too, some of the world’s worst. Navigating that tension is the bread and butter of the Product Management literature.

Organisational Reality

Less explored are the countless daring product visions that were hamstrung internally long before the market got a look in. And the organisational story-telling that whirred into action to rationalise why ‘we could never have done it, anyway’.

Obstacles introduced by the organisational reality not only make it drastically less likely that we achieve the possibilities of a future-facing product vision, they prevent that vision from making first contact with the market. They then bury the evidence in the organisational consciousness - we train ourselves to not want daring product vision.

If we’re going to succumb to adaptive preference of our product vision, let it be led by feedback from reality. Not by unnecessary internal obstacles that make us aim lower than market reality would demand.

Deciding what to test in AI prototypes

Deciding what to test is the first, and most important, step in defining an AI prototype. This decision shapes all other decisions in designing the prototype.

Defining the hypothesis under test is important because prototypes are messy. And messy experiments give muddled results; hiding the relevant amongst the incidental.

Prototypes are broad brush-stroked approximations of the final product. The learnings from a prototype can be game-changing, intriguing, and wholly surprising. But to learn from a prototype with confidence, the effect or insight will need to be large.

It is very easy to take a finding from a prototype and generalise it, only to later find that the learning was tied directly to some imperfection in the prototype itself. Minor differences between the prototype and end-product can and do impact the learnings. Details such as how fast an element loads, or being constrained to a few user journeys have very real effects on how the user responds.

With prototypes, we're looking for big effects. Things that are obvious once our attention is drawn to them. Not optimisations. Leave optimisations for later in the design process, and consider A/B or multivariate testing on large user groups.

With many elements under test, the feedback will be noisy. It is difficult to untangle the causes and effects of what our users tell and show us.

The types of things we might want to test include:

The technical details

The performance of the model.

The speed of delivering the model results.

The rate of feedback from a model and whether a user can visibly ‘teach’ the system.

The interface

How interactive is the AI feature.

Are there separate elements for the AI feature; how are these delineated from the rest of the system.

The messaging

Explaining the AI algorithm; what it does and how it learns.

Teaching the user how to make the product learn.

How numeric the model results are; how numerate is the user expected to be.

Whether and how we communicate error messages.

Error correction

How to put fail-safes in place in case of error.

How to determine if the model has broken down.

What we do when the model breaks down.

How to recover from catastrophic error.

Separating these tests is important. For testing the user impact of technical details it is best to have arrived at a finalised design for the interface, messaging and error communication.

Messaging is closely tied to the interface and error-handling and often won’t be tested alone. Instead, the interface and messaging or the error-handling and messaging will be tested in pairs.

The important thing to bear in mind is that we don't want to be rapidly swapping these permutations in the hope that we’ll observe fine differences in user responses to help us determine the optimal combination. With small user groups the results will almost certainly not be statistically significant, and will rarely be generalisable or relevant.

Instead, choose a configuration with clearly defined upfront assumptions and observe whether the user behaves as expected, and if not, why not.
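To see why small user groups cannot resolve fine differences, here is a minimal sketch of Fisher's exact test written with only the Python standard library. The function name `fisher_exact_p`, the group sizes and the success counts are all hypothetical, chosen for illustration rather than taken from any real prototype test.

```python
from math import comb

def fisher_exact_p(success_a, fail_a, success_b, fail_b):
    """Two-sided Fisher exact p-value for a 2x2 table of outcomes.

    Rows are the two prototype variants; columns are success / failure.
    """
    row_a = success_a + fail_a
    row_b = success_b + fail_b
    total_success = success_a + success_b
    all_tables = comb(row_a + row_b, total_success)

    def prob(x):
        # Hypergeometric probability of x successes landing in variant A.
        return comb(row_a, x) * comb(row_b, total_success - x) / all_tables

    lowest = max(0, total_success - row_b)
    highest = min(row_a, total_success)
    probs = [prob(x) for x in range(lowest, highest + 1)]
    observed = prob(success_a)
    # Sum every table that is at least as unlikely as the observed one.
    return sum(p for p in probs if p <= observed * (1 + 1e-9))

# Hypothetical results: 60% vs 80% task success in both scenarios.
p_small = fisher_exact_p(3, 2, 4, 1)          # 5 users per variant
p_large = fisher_exact_p(300, 200, 400, 100)  # 500 users per variant

print(f"5 users per variant:   p = {p_small:.3f}")
print(f"500 users per variant: p = {p_large:.2e}")
```

With five users per variant, a 60% versus 80% success rate is indistinguishable from chance. The same proportions across 500 users per variant are clearly significant. This is why prototype sessions are better spent checking upfront assumptions than ranking near-equivalent variants.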

— — —

Signalling in Innovation Management

No matter the context, there will never be enough resources to do everything.

This can be especially evident when it comes to managing Innovation Pipelines. The 'new' and the ‘possible’ is a very large search space. There is simply no way to swallow and digest the entire buffet of options. So decision-making needs to be applied, to weed out the ideas with the least potential. Typically this materialises as an Innovation Pipeline with validation stage-gates ranging from concept to experiments to Pilot.

Restricting what ideas move forward toward launch and commercialisation is a necessary, sensible practice. How these ideas should be restricted is always imperfect and often debated.

A less appreciated factor is the impact of this decision-making upon what ideas enter the pipeline in the first place.

We like to think that ‘good ideas will win out’ and idealise inventors and innovators as creative mavericks, driven by vision and creativity. They become infatuated with an idea or concept and push this toward reality.

Sometimes.

More often they are human, reasonably rational creatures who like the feeling of success. They want to work on things that have impact. They want to be seen as innovative.

We are forecasting animals. Stage-gating decisions are observed. We learn from what gets through and what gets rewarded.

Gate-keeping is a signal generating function as well as a filtering function. Decision-makers not only decide what ideas get to market, they create the environment from which ideas are drawn.

So there are two major considerations in the management of early stages of in-company innovation.

Are we creating the right environment for the collection of ideas and concepts that extend our existing business lines and find new adjacent business opportunities?

Are we nurturing the 'right' ideas at this early, vulnerable stage to show them in the best possible light?

— — —

Avoid ‘Big Bang’ Delivery

30 years into the software revolution, we've learned a thing or two about what works and what doesn't. 'Big Bang' Delivery falls squarely into that second category.

This may be entirely obvious to you, having learned the hard way or simply accrued the learnings of others through the adoption of Lean, Agile, Continuous Delivery, CI/CD or some combination of those. Even so, it's worth reminding ourselves why ‘Big Bang’ Delivery doesn’t work.

The failings of 'Big Bang' Delivery can be summed up in four ways.

No feedback, no course correction.

Expectations rise, rapidly.

It's easier to measure small things than big things. Estimations and forecasts go bananas.

Concentrated risk - all your eggs are in one big, fragile basket.

Related to these, there's increased deployment risk, and lack of believability of progress for key stakeholders.

It's not clear to me what the relative weighting of these factors is, or even whether their relative contribution is consistent across projects. I am confident that they each contribute to the likelihood of failure. If I had to put money on one factor over another, it would be that feedback is vital. Shielding a project from early and regular feedback is a sure-fire way to deprive it of the information it needs to evolve, adapt and grow.

A final point about 'Big Bang' delivery. 'Big Bang' delivery is an indication that things are going badly. Teams that know better, deliver continuously.

Teams that know better but have run into difficulty (technical, coordination, political) drift towards Big Bang.