Minimal Theme for Roam Research

Roam Research is the leading light in a spate of emerging note-taking and thinking tools that aim to move beyond the constraints of traditional text documents.

This text renaissance is moving us towards new writing tools that better support the capturing, creation and sharing of human thought. Roam is en route to implementing a near-full conception of hypertext as originally imagined by visionaries like Vannevar Bush and Ted Nelson. Simply put, Roam allows easy referencing of blocks of text and introduces bidirectional links between pages and blocks of text. On the surface this might not sound like much, but a few sessions using the tool quickly illustrate why Roam’s early adopters are so cultish.

I’ve been playing with Roam on and off since December. This tearing up of the write -> structure -> organise -> refactor -> bundle/unbundle flow has markedly changed how I write, how I think and how I remember.

This past month, I’ve re-committed to using Roam daily in place of Evernote and Bear. Being a sensitive snowflake, however, the UI bothered me. Roam recently opened up the ability to customise the look and feel, so I created this design (below).

Double-pane view

The design aims to be minimal and clear, and to remove the visual distractions introduced by backlinks, external links and bullet-guides. It is geared around the two-pane view, or the two-pane view with sidebar.

Tags are designed to be visually unobtrusive and a secondary feature. The exception is tags that are commonly used and actionable: specifically, TODO, to read, to process and to write.

Single-pane view

Highlights are designed to be visible but not jarring and fit the colour scheme of the design.

The CSS can be found on GitHub here.

— — —

Prototyping is Thinking through Making

The human mind did not evolve in a glass jar. We are social animals that like to move. We think on our feet, and in conversation. We learn by watching, copying, trying and testing. In other words, we humans do our best thinking through interaction.

When we speak, when we write, when we make, we do our thinking external to an isolated mind. Our cognition is embodied and extended with the help of our tools and our environment. We do our thinking, with things, in the world.

Prototyping is thinking by making. It uses each of these benefits to expand the quantity and quality of our thinking.

Prototyping is the simplest way to make. It is the easiest way to unlock 'thinking by making'. Low-fidelity prototyping requires next to no pre-defined skills. But it offers three benefits:

firms up our thinking, giving us details to explore.

gives us new ideas as we explore.

creates something that we can test with users.

Thinking by testing is an incredible benefit of prototyping. By introducing real users into the design process at the earliest possible stage we open our thinking to the people who'll use the end product. This physical conversation is useful for testing and validating the assumptions of our core concept and broad design details.

It goes further than this. It creates inputs from the people who matter - in a medium that allows them to give meaningful feedback.

In other words, we are extending our own thinking by recruiting users to think with us.

— — —

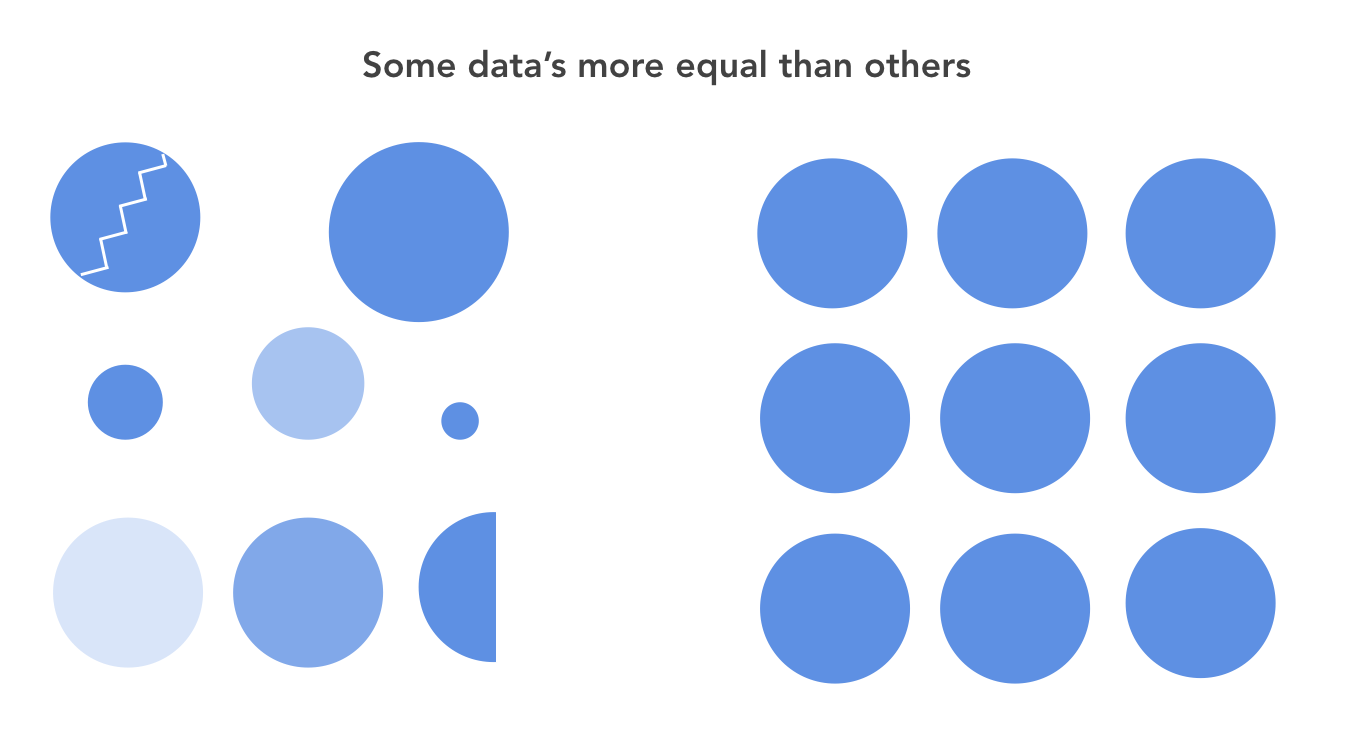

Some data’s more equal than others

As machine learning has matured, ML algorithms have become commoditised and therefore it is the data that is valuable. Or so the argument goes.

This has a nice ring to it. It sounds true.

It has the convenient benefit of aligning with the strengths of many pre- or early-internet era incumbents, and neutralising their weaknesses in ML. That alone should give you pause.

Data is a briefcase word. It means many things.

The data that matters for that machine learning application you have in mind only partially overlaps with the data you've been passively collecting.

Where the data does overlap, the data is messy, incomplete, inaccurate. Even the flashiest algos will struggle under these conditions.

The data is probably difficult to access. Like all product development, machine learning products are powered by iteration and experimentation. Without easy access, your use of data will be pedestrian.

To be an AI powerhouse is to be a data powerhouse. And that means having an insatiable appetite for hoovering up all kinds of data, at any and every opportunity. It means finding new ways to digitise information flows that didn’t previously exist. And to build walls around that data collection.

This appetite, married to algorithms that create feedback loops to the data itself (inferring from the data, adding to the data, correcting from the data, using the data as labels), is what builds a data flywheel.

A data flywheel is made up of lots of data. Lots of data is necessary but not sufficient.

So when you say "it's not about algorithms, it's about data", can you please explain what your data looks like?

— — —

Affordances and AI

Affordances are the potential actions that a product makes possible.

A chair affords sitting. Clearly. It also affords standing on it, waking from Inception-dreams and lion-taming. These potential actions are easily discoverable only if they are clearly perceivable to the user.

This creates an interesting puzzle for designing AI products. The 'magic' of AI is to outsource decision-making and automate it away.

How then is a user to know what the product can do?

Typically we rely on two sources for discovering affordances:

signifiers such as labels, sounds, and shape.

convention - understanding how a product ‘should’ be used.

Signalling the affordance of AI features is especially important today. Because AI products are relatively new, signifiers increase the likelihood that the AI capability is used. Importantly, they also ensure that the AI capability is not accidentally triggered.

As we emerge into a world with increasing AI capabilities infused into previously 'dumb' products, we are faced with a further challenge: how to balance the convenience of 'invisibility' against making explicit what the system can do.

The tendency is to squeeze the most possible convenience from the AI capability. It is very tempting to automate away those human interactions. However, our existing AI systems introduce errors that make this approach risky. Imagine a chair that crumbled 5% of the time you sat on it. I have one. It’s not enjoyable.

For systems with even moderate error rates (i.e. most systems for the foreseeable future) this balance should lean heavily toward explicit signalling.
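To put a rough number on the crumbling chair, here is a minimal sketch. It assumes each use fails independently at the same fixed rate, which is a simplification made for illustration and not a claim about any real AI system; the function names are hypothetical.

```python
def chance_of_at_least_one_failure(error_rate: float, uses: int) -> float:
    """Probability that at least one of `uses` independent attempts fails."""
    return 1.0 - (1.0 - error_rate) ** uses


def uses_until_failure_likely(error_rate: float, threshold: float = 0.5) -> int:
    """Smallest number of uses at which a failure becomes at least `threshold` likely."""
    if not 0.0 < error_rate <= 1.0:
        raise ValueError("error_rate must be in (0, 1]")
    if not 0.0 < threshold < 1.0:
        raise ValueError("threshold must be in (0, 1)")
    uses = 1
    while chance_of_at_least_one_failure(error_rate, uses) < threshold:
        uses += 1
    return uses


if __name__ == "__main__":
    # Assumed 5% error rate, as in the chair example.
    print(uses_until_failure_likely(0.05))
```

Under these assumptions, a 5% error rate means a user is more likely than not to have hit a failure within their first 14 uses. A silent, invisible feature gives them no way to anticipate or interpret that failure.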

Conversely, false affordances present another difficulty. Introducing AI into a product sets expectations of what is possible with the product. Siri invites voice interactions, yet try to speak to her like a human and you’ll quickly find yourself frustrated.

We have entered an age where AI products and features are gaining mainstream adoption. Conventions and best practice in the use of AI are beginning to emerge. The decisions that designers collectively take now will shape how human-AI interaction proceeds for the coming years.

Err on the side of explicit instruction until we’ve learned what can reasonably be expected of AI. Or until such time as the AI can confidently adapt to our mistakes.

— — —

Doubt and Double-loop Learning

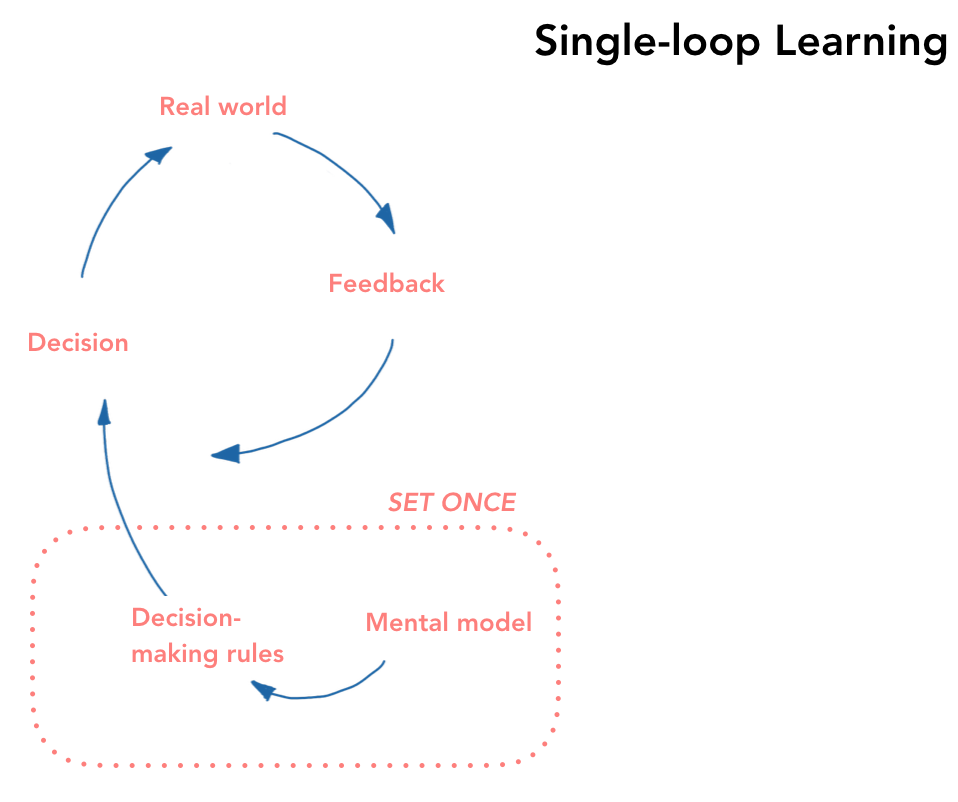

Most of us, most of the time, interact with the world through single-loop learning.

When we meet a problem, we fall back on our experience and tackle the problem from our mental quiver of skills and knowledge. We look at a situation, identify it as ‘one of those’ problems we’ve encountered in the past and get to work. We modify our actions if there’s a gap between the expected and achieved outcomes.

If something’s not going well, we try to fix the situation.

In reality, this learning loop will be sub-optimal.

It will have baked-in assumptions that may not be grounded in reality. The problem that we thought was ‘one of those’ may be an entirely different type of problem altogether. The mental model we’ve deployed to get the job done may be wholly unsuited to the task.

Double-loop learning aims to address that gap.

Double-loop learning recognises that the way a problem is defined and solved can be a source of the problem.

Instead of trying to fix the problem, we ask: are we solving the right problem? Is our approach suited to the task? Do we have the right systems in place? Double-loop learning asks us to reconsider the problem in its broader context.

But double-loop learning can be hijacked.

Especially by the over-thinking mind. Doubt is a double-edged sword in learning. Doubt in the current plan of action is what begins the journey to unlock better approaches. But plans of action take time to evaluate.

Over-rotating on the outer loop will lead to premature, invalid feedback. Unnecessary splashing and wave-making. Sometimes it’s better to keep the head down and see how far we get.

A good coach or manager gets you to stay the course until it is appropriate to rip up the rule book. A bad one will leave you questioning basic truths when you should be solving the problem at hand.

— — —

The Management Quantum Effect

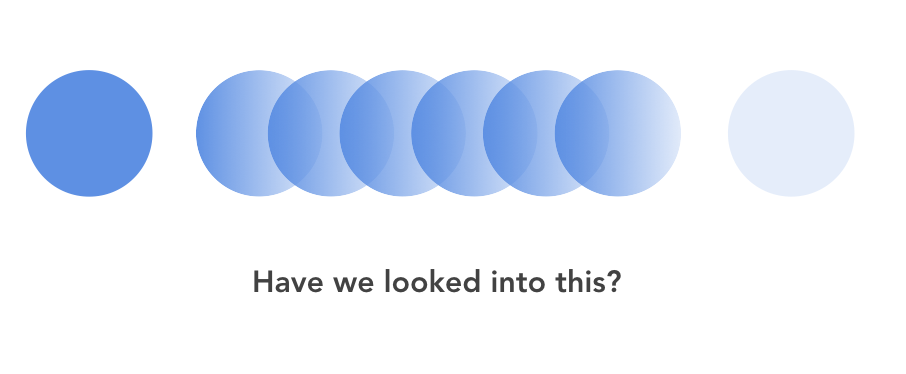

I’ve noticed an interesting effect in management. Simply by asking a question, you change the answer.

Managers should not underestimate the effect that their questions have on their team and what their team emphasises.

We are raised in a system where questions are tests. Directed questions are perceived as tests. We are also raised to acknowledge that tests deserve attention. Because attention is finite, introducing new tests directs attention away from other priorities. It is a disruption.

The Management Quantum Effect materialises in two ways.

The first is that many employees will scramble to provide an answer, usually the answer they predict you will want. This may not be the ‘true answer’: the answer you would have received had you not asked the question.

The second is that serious work is deployed to interpret the question and come back with an answer. This franticness takes away from other work and so affects the whole system.

This leaves the manager who's in need of answers with a dilemma. To ask, leading to diversion and disruption. Or to remain in the dark.

Is there a third way? Eric Weinstein’s interpretation of quantum mechanics seems to offer one. He maintains that quantum systems arrive at certain, sensible answers if asked the right type of question.

For managers, what does the right question look like? That depends. Clearly signalling that the question isn't important tends to work well. It removes the testiness.

Unless, of course, the intention is to force change in the system by asking. Then, ask away.

Talkers are dangerous

Talkers are dangerous in an organisation.

Talkers don't do. Talkers waste time. Talkers talk their way into positions for which they are wholly unsuited. They lead the organisation astray.

But none of these are so bad.

The biggest danger that Talkers introduce to an organisation is complacency.

Talking creates the appearance of work being done. This appearance of work done creates an illusion of progress.

There is subtlety here. Failing to make progress is not bad in and of itself. Deploying strategic inaction is a legitimate option.

Neither is it true to say that Talkers leading the organisation down the wrong path is unequivocally bad. Predicting the right path to take is tricky at the best of times.

Instead, it is the false impression of action that is dangerous.

The false impression of action has knock-on effects. It creates assumptions in other parts of the organisation. One such assumption is that 'someone is working on this' and therefore there's no need for us to solve this problem we've just noticed. Another is that we may be actively shut down because 'someone is already working on this'.

Talkers create artificial, fragile place-holders around a problem that prevent others in the organisation from solving that problem.

Talkers introduce fragility to the organisation by injecting false assumptions into the planning of dependent groups.

The effects of Talkers are not self-contained.

Because Talkers are good at talking, they are good at promoting their talk. Their ideas tend to spread. Because talk is cheap it is easy to do a lot of it. Updates can be frequent. This too helps their ideas spread.

As humans we are surprisingly unskilled at differentiating between secondary evidence of real, tangible progress and the mere appearance of progress. Talkers who simply don’t know any better can be more detrimental than Talkers who knowingly practise the dark arts of peddling bullshit. A well-intentioned Talker does not signal deception.

It gets worse.

The mere fact that a Talker is talking, even if they lay little claim to ownership of action, can be counter-productive to progress.

And so we get to my suggestion.

The Hippocratic Oath for Talkers. First, let your talking do no harm. If you Talk about a topic you must point to action being done or take responsibility for the creation of action. It is a derivative of Amazon's rule of ‘if you notice a problem, it's now your problem’. The Talker's Rule states: ‘if you Talk about an opportunity it's your responsibility that the opportunity is achieved’.

Invention & Innovation

Invention and innovation are often confused.

They are lumped together as characters of a bigger story; a story of the battle between the old and the new.

But invention and innovation are distinct things. They require different skillsets and different approaches. Their objectives and outputs are greatly at odds.

Invention is producing something for the first time. It is a single component. A new solution to a technical problem.

Innovation is a new way of making a system of technologies consistently work to solve a problem for users. It scales an ability so that it can have market impact.

To realise its potential, an invention must become something that works for everybody who needs it. It must scale.

Innovation is that path to scale.

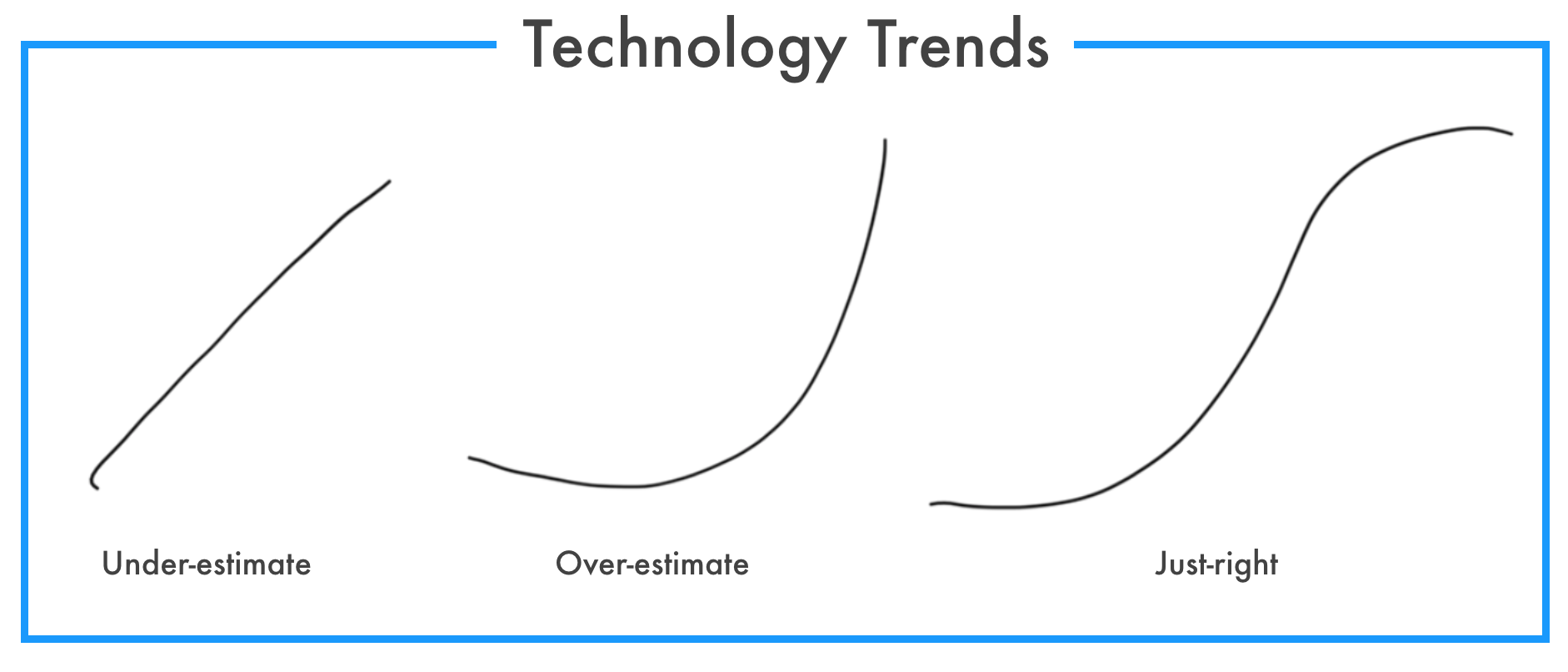

Trends: Pattern errors & extrapolation errors

It strikes me that there are two types of errors in trend forecasting.

The first are errors in imagining that a trend exists. These pattern errors mistake a smattering of signals for an identifiable trend. Pattern errors join the dots to draw a shape that isn’t there.

The second type of trend-forecasting error arises from misinterpreting the shape of the growth. These extrapolation errors correctly spot a trend but misjudge the curve that its usage, adoption or development will follow.

Perspectives on trends most commonly get lumped into two categories: linear growth or exponential growth.

Early in the lifecycle, linear growth is likely. But once a trend has picked up any kind of steam, linear extrapolation greatly underestimates its growth.

If a trend has reached that tipping point, exponential extrapolation makes sense. But looking at a new technology trend and immediately inferring exponential growth overestimates the trend's potential.

These two patterns are reconciled in the s-curve. The real difficulty is identifying where on the s-curve the trend currently exists.
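A minimal sketch of both extrapolation errors, assuming adoption follows a logistic s-curve. The ceiling, growth rate and midpoint are hypothetical values chosen for illustration, and the function names are not from any library. A forecaster takes two early observations and projects them forward with a straight line and with a constant growth ratio.

```python
import math


def logistic(t: float, ceiling: float = 100.0, rate: float = 1.0,
             midpoint: float = 10.0) -> float:
    """Hypothetical 'true' adoption at time t, following an s-curve."""
    return ceiling / (1.0 + math.exp(-rate * (t - midpoint)))


def linear_forecast(t0: float, t1: float, t: float) -> float:
    """Extend the straight line through the observations at t0 and t1."""
    y0, y1 = logistic(t0), logistic(t1)
    slope = (y1 - y0) / (t1 - t0)
    return y1 + slope * (t - t1)


def exponential_forecast(t0: float, t1: float, t: float) -> float:
    """Extend the constant per-period growth ratio seen between t0 and t1."""
    y0, y1 = logistic(t0), logistic(t1)
    growth = (y1 / y0) ** (1.0 / (t1 - t0))
    return y1 * growth ** (t - t1)


if __name__ == "__main__":
    # Observations at t=4 and t=5, well before the assumed midpoint at t=10.
    for horizon in (10, 20):
        print(horizon,
              round(logistic(horizon), 2),
              round(linear_forecast(4, 5, horizon), 2),
              round(exponential_forecast(4, 5, horizon), 2))
```

With these assumed parameters, the linear forecast falls far short of actual adoption at the midpoint, while the exponential forecast overshoots the ceiling by orders of magnitude once the curve flattens. Neither forecaster knows where on the curve they are standing.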

Executives should change their minds

It's ok for executives to change their minds.

In fact it's good.

An executive who changes their mind can be reasoned with. Or at least persuaded. An executive who changes their mind incentivises others to bring new information and invites new arguments to recalibrate direction.

Changing their minds is not enough. Executives should change their minds, but they must articulate why they’re doing so.

A flip-flopping executive is worse than an immovable one. They are hard to predict. They are whimsical. At any given time you cannot know what they will resolve to do.

The decision is important. But so too are the steps leading up to the decision. Executives who change their minds should show their work.

And while they’re at it, executives should acknowledge what they got wrong and why. It builds credibility in the new decision. And avoids the building of resentment in those who canvassed unconvincingly before the mind was changed.

Executives should change their minds. And explain why.

Short Thoughts

Short thoughts are brief pieces of writing that each focus on one little idea.

The concept is inspired by Seth Godin and Derek Sivers. Both encourage would-be writers to consistently share short pieces of writing.

Not every piece of writing should be an article.

Where an article is warranted, sometimes we don’t have all the pieces to make it compelling. And yet some things deserve to be put 'out there'. Sivers suggests we "present a single idea, one at a time, and let others build upon it."

This interweaves nicely with Godin championing consistency.

The drip. A post, day after day, week after week, 400 times a year, 4000 times a decade.

So here I publish short thoughts - longer than a tweet, shorter than an essay.

Sources